T-SQL Tuesday - Assumptions, and Wisdom

08 July 2014

It’s T-SQL Tuesday today. The topic: assumptions, things that are accepted as true, without proof.

There are some great posts to read.

- Ken Wilson (@_KenWilson, blog) writes about why we do things because “that’s the way it’s always been done”.

- Aaron Bertrand (@AaronBertrand, blog) writes about common assumptions people make about how SQL Server works.

- Boris Hristov (@boris, blog) writes about how many brilliant professionals don’t train or present because they assume something is stopping them.

- JK Wood (@sqlslacker, blog) writes about data teams that build complexity on top of complexity by assuming everything exists for a good reason.

- Mickey Stuewe (@SQLMickey, blog) writes about why it’s important to list your assumptions when starting a project.

- Sebastian Meine (@sqlity, blog) writes about the dangers of assuming your company is safe from attacks (a.k.a. “security by obscurity”).

- Rob Farley (@rob_farley, blog) writes about the danger of assuming SSIS lookup transformations work like JOINs, and how to create lookup transformation behavior using T-SQL.

- Russ Thomas (@sql_judo, blog) writes about the dangers of assuming something isn’t your problem.

- Jeffrey Verheul (@DevJef, blog) writes about teams that assume their tests are complete and their automation never fails, and about people who assume they know the answer to every question.

- Adam Mikolaj (@SqlSandwiches, blog) writes about what happens when everyone assumes a problem is your company’s fault.

- Kenneth Fisher (@sqlstudent144, blog) writes about the dangers of assuming online answers are correct, and what to do to check.

- Jason Brimhall (@sqlrnnr, blog) writes about the benefits of assuming responsibility, rather than assuming somebody else will take care of it.

- Julie Koesmarno (@MsSQLGirl, blog) writes about ways to validate assumptions using T-SQL.

- Warwick Rudd (@Warwick_Rudd, blog) writes about the assumptions people make when creating indexes.

- Wayne Sheffield (@DBAWayne, blog) writes about a common ‘smart’ assumption: a table will return data in clustered index order without an ORDER BY clause.

- Cathrine Wilhelmsen (@cathrinew, blog) writes about how assuming responsibility is a way to own, and question, assumptions.

- Vicky Harp (@vickyharp, blog) writes about a common gotcha: date formats and regional assumptions.

- Chris Yates (@YatesSQL, blog) writes about the dangers of assuming your backups are good.

There is some amazing wisdom in these posts. I’d recommend reading each and every thing here. Twice. Don’t assume you already know these lessons (see what I did there?).

About T-SQL Tuesday

T-SQL Tuesday was started by Adam Machanic ( Blog | @AdamMachanic ) in 2009. It’s a monthly blog party with a rotating host, who is responsible for providing a new topic each month. In case you’ve missed a month or two, Steve Jones ( Blog | @way0utwest ) maintains a complete list for your reading enjoyment.

Thanks for blogging, and for reading!

T-SQL Tuesday - Assumptions

01 July 2014

It’s hard to rock the boat.

It’s hard to ask the basic questions that everybody knows.

It’s hard to slow down and ask for clarification.

So, we improvise. We make assumptions: things that are accepted as true, without proof. We often forget our assumptions, or make them instinctively.

For this T-SQL Tuesday, the topic is assumptions.

For example:

- The sun will come up tomorrow.

- Firewalls and anti-virus are enough to protect my computer.

- My backups work even if I don’t restore them.

- I don’t need to check for that error, it’ll never happen.

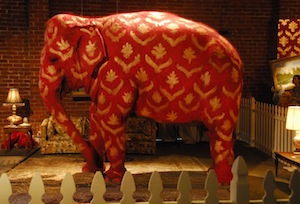

Your assignment for this month is to write about a big assumption you encounter at work, one that people are uncomfortable talking about. Every team has an elephant in the room.

What happens if these big guesses aren’t true?

Housekeeping

A few rules to follow when participating:

- Your post must be published between 00:00 PDT Tuesday, July 8th, 2014, and 00:00 PDT Wednesday, July 9th, 2014.

- Your post must contain the T-SQL Tuesday logo from above and the image should link back to this blog post.

- Trackbacks won’t work, so please tweet a link to me (@DevNambi) or send an email (me at devnambi dot com).

Some optional (and highly encouraged) things to also do:

- Include a reference to T-SQL Tuesday in the title of your post.

- Tweet about your post using the hashtag #TSQL2sDay.

- Consider hosting T-SQL Tuesday yourself. Adam Machanic keeps the list.

About T-SQL Tuesday

T-SQL Tuesday was started by Adam Machanic ( Blog | @AdamMachanic ) in 2009. It’s a monthly blog party with a rotating host, who is responsible for providing a new topic each month. In case you’ve missed a month or two, Steve Jones ( Blog | @way0utwest ) maintains a complete list for your reading enjoyment.

Results

This July’s T-SQL Tuesday is now over. Well over a dozen people contributed their insight and wisdom, and their posts are well worth a read.
